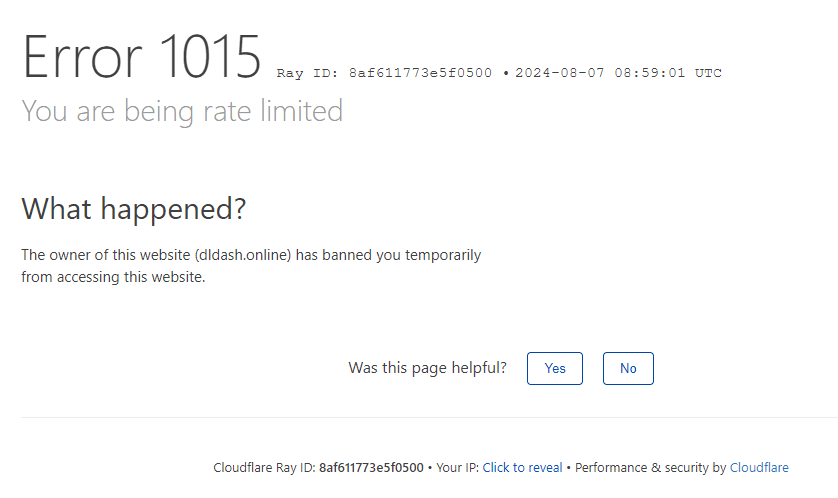

Cloudflare Error 1015: You Are Being Rate Limited

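Cloudflare Error 1015 appears when a client sends more requests than a website's rate limiting rule allows. Site owners configure these rules to keep their servers from being overwhelmed, and Cloudflare enforces them by blocking further requests for a period of time, typically with an HTTP 429 (Too Many Requests) status.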

What is Cloudflare Error 1015 and why does it occur?

Understanding Cloudflare's Error 1015

Cloudflare’s Error 1015 occurs when a client exceeds a request limit that the website owner has configured in Cloudflare. The limit helps manage incoming traffic and protects the web server from overload and security threats.

Rate limiting mostly affects clients that send many requests in quick succession. Cloudflare counts these requests to reduce risk, and capping how many requests each user or bot can make keeps the website's performance stable.

Common triggers for rate limiting

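Rate limiting triggers when Cloudflare counts more requests from a client, usually identified by its IP address, than the site's rule allows within a time window. This often happens during automated activity such as web scraping or load testing. It can also happen during unusual traffic spikes if the owner has set a tight limit.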

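Accessing Cloudflare-protected sites through free proxies or VPNs is another common trigger. Many people share the same exit IP addresses, so their combined requests count against a single limit, and Cloudflare may already treat those addresses as suspicious. Common triggers include: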

- Making multiple requests in a short period.

- Many users or bots sending traffic from one shared IP address.

- Requests sent through unverified IPs or proxies.
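To make the counting concrete, here is a toy fixed-window limiter keyed by IP address. It illustrates the general idea only and is not Cloudflare's actual implementation; the limit of 10 requests per 10 seconds and the IP address are assumptions chosen for the example. It shows how three people behind one shared IP can trip a limit that none of them would hit alone.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Toy fixed-window rate limiter keyed by client IP (illustration only)."""

    def __init__(self, limit: int, window_s: float) -> None:
        self.limit = limit          # max requests allowed per IP per window
        self.window_s = window_s    # window length in seconds
        # Maps (ip, window index) to the number of requests seen in that window.
        self.counts = defaultdict(int)

    def allow(self, ip: str, now: float) -> bool:
        """Record a request from `ip` at time `now`; return False once the limit is exceeded."""
        window = int(now // self.window_s)
        self.counts[(ip, window)] += 1
        return self.counts[(ip, window)] <= self.limit


# Assumed rule: 10 requests per 10 seconds per IP.
limiter = FixedWindowLimiter(limit=10, window_s=10.0)
shared_ip = "203.0.113.7"  # documentation-range IP, e.g. a free VPN exit node

# Three users behind the same IP each send 4 requests within one window.
results = [
    limiter.allow(shared_ip, now=1.0 + user + i * 0.1)
    for user in range(3)
    for i in range(4)
]
print(results.count(True), results.count(False))  # 10 2
```

Each user sent only 4 requests, yet the last 2 of the 12 combined requests are rejected because they all count against the same IP.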

How does Cloudflare's rate limiting affect web scraping?

Impact on web scraping activities

Web scraping means gathering data from websites automatically. Because scrapers usually send requests much faster than a human visitor, they frequently run into Cloudflare Error 1015.

The error is returned once a scraper or scraping API crosses the site's request limit.

Scraping tools that repeat requests rapidly are the ones most likely to be blocked. Cloudflare's measures are designed to protect the website, so scrapers trying to gather data quickly feel the impact most.

Challenges for web scrapers and bots

Web scrapers run into problems once rate limits activate: a bot that triggers a limit cannot access the website until the block expires.

Common challenges include:

- Frequent rate-limiting errors during high-volume scraping.

- Bursts of requests in a short period cutting off access.

- Scraping patterns that fail to stay under rate limits.

Balancing scraping needs with rate limits

To avoid rate limiting, scrapers must adjust their methods. Rotating IP addresses reduces the risk of rate-limiting errors, and proper throttling helps maintain access.

Spacing out scraping intervals is another strategy: delaying requests lowers the chance of being blocked. Scrapers should also spread the request load across different IP addresses.
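The arithmetic behind spacing requests is simple. The sketch below assumes you have estimated a limit of `limit` requests per `window_s` seconds (Cloudflare does not tell clients what a site's limit is) and applies a hypothetical `safety` factor so you target only part of that estimate.

```python
def per_ip_delay(limit: int, window_s: float, safety: float = 0.8) -> float:
    """Seconds to wait between requests from one IP to stay under `limit` per `window_s`.

    `safety` (hypothetical parameter) targets only a fraction of the estimated
    limit, leaving headroom because the real limit is unknown to the client.
    """
    if limit <= 0 or window_s <= 0 or not 0 < safety <= 1:
        raise ValueError("limit and window_s must be positive, and 0 < safety <= 1")
    return window_s / (limit * safety)


def total_rate(limit: int, window_s: float, n_ips: int, safety: float = 0.8) -> float:
    """Aggregate requests per second when load is spread evenly across `n_ips` addresses."""
    return n_ips / per_ip_delay(limit, window_s, safety)


print(per_ip_delay(10, 10.0))    # 1.25
print(total_rate(10, 10.0, 4))   # 3.2
```

With an assumed limit of 10 requests per 10 seconds and a safety factor of 0.8, each IP waits 1.25 seconds between requests; spreading the load over 4 IPs gives about 3.2 requests per second overall.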

What are the best practices to avoid Cloudflare Error 1015?

Implementing proper request throttling

Throttling caps the number of requests sent within a time frame. It keeps scrapers and scraping APIs below Cloudflare’s rate limits, which avoids rate-limiting errors and keeps access stable.

Throttling spreads requests over a longer period. This lowers the request load so you stay under the limit rather than hitting it. Adjusting request intervals makes scraping safer.
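A client-side throttle can enforce this automatically. The following is a minimal sliding-window sketch; `SlidingWindowThrottle` is a hypothetical helper, not part of any library, and its clock and sleep functions are injectable so the behavior can be tested without actually waiting.

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowThrottle:
    """Allow at most `max_requests` calls in any `window_s`-second window."""

    def __init__(
        self,
        max_requests: int,
        window_s: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.max_requests = max_requests
        self.window_s = window_s
        self.clock = clock
        self.sleep = sleep
        self.sent: Deque[float] = deque()  # timestamps of recent requests

    def wait(self) -> None:
        """Block until another request fits in the window, then record it."""
        while True:
            now = self.clock()
            # Drop timestamps that have slid out of the window.
            while self.sent and now - self.sent[0] >= self.window_s:
                self.sent.popleft()
            if len(self.sent) < self.max_requests:
                self.sent.append(now)
                return
            # Sleep until the oldest recorded request leaves the window.
            self.sleep(self.window_s - (now - self.sent[0]))
```

Call `throttle.wait()` before each request; for example, `SlidingWindowThrottle(8, 10.0)` keeps you at or below 8 requests in any 10-second window. A sliding window avoids the double-sized burst that a fixed window permits around window boundaries.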

Using rotating IP addresses and proxies

Rotating IPs distributes the traffic load across different IP addresses, so Cloudflare does not see too many requests coming from any single IP. It is an effective way to get around per-IP rate limiting.

Using multiple proxies reduces the request load on each address. A rotating proxy pool can assign a different IP address to each request, which helps avoid being blocked by Cloudflare’s measures. Steps to use rotating IPs include:

- Employing a new IP address for each request.

- Using a VPN or proxy service.

- Adjusting the scraping API to rotate IPs.
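The rotation step can be sketched with the standard library. This assumes you already have a pool of proxy URLs (the ones below are placeholders) and uses round-robin assignment, which is one simple rotation policy among several; `assign_proxies` and `opener_for` are hypothetical helper names, while `urllib.request.ProxyHandler` is the standard way to route urllib requests through a proxy.

```python
import urllib.request
from itertools import cycle
from typing import Dict, Iterable, List


def assign_proxies(urls: Iterable[str], proxies: List[str]) -> List[Dict[str, str]]:
    """Pair each URL with the next proxy in round-robin order."""
    if not proxies:
        raise ValueError("need at least one proxy")
    rotation = cycle(proxies)
    return [{"url": url, "proxy": next(rotation)} for url in urls]


def opener_for(proxy: str) -> urllib.request.OpenerDirector:
    """Build a urllib opener that sends both HTTP and HTTPS traffic through `proxy`."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


# Placeholder proxy pool; a real one would come from your proxy provider.
pool = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]
jobs = assign_proxies(
    ["https://example.com/page/1", "https://example.com/page/2", "https://example.com/page/3"],
    pool,
)
for job in jobs:
    print(job["url"], "via", job["proxy"])
    # To actually send the request: opener_for(job["proxy"]).open(job["url"], timeout=10)
```

Rotation spreads the count across addresses, but it does not lower the total load on the site, so it still belongs alongside throttling.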

Can you bypass Cloudflare Error 1015 legally and ethically?

Legal considerations for bypassing rate limits

Legally, bypassing rate limits can expose you to real risk. Always read the target website’s terms of use before scraping.

Unauthorized scraping can result in IP bans or legal action.

Websites protect their data by implementing rate limits, and bypassing those limits without permission may violate the site's terms. Follow the applicable legal guidelines whenever you scrape.

Ethical approaches to web scraping

Ethical scraping respects rate limits and data access rules. Where the target website provides an official API, use it: it gives you sanctioned access to the data within documented limits.

Ethical scraping also involves telling website owners what you are doing. Open communication builds trust and understanding. Ethical methods include:

- Using the site's official API, or a reputable scraping API, to manage request rates.

- Configuring HTTP headers, such as the User-Agent, correctly.

- Avoiding scraping without consent from the website owner.
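One concrete, machine-readable data access rule is a site's robots.txt file. Python's standard `urllib.robotparser` module can check whether a path is allowed for your user agent and whether the site requests a crawl delay. The robots.txt content, user agent, and `check_robots` helper below are made-up examples; in practice you would fetch the real file from the site.

```python
from typing import Optional, Tuple
from urllib.robotparser import RobotFileParser


def check_robots(robots_txt: str, user_agent: str, url: str) -> Tuple[bool, Optional[int]]:
    """Return (allowed, crawl_delay_seconds) for `url` according to a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url), parser.crawl_delay(user_agent)


# Made-up robots.txt for illustration.
ROBOTS = """\
User-agent: *
Crawl-delay: 5
Disallow: /private/
"""

print(check_robots(ROBOTS, "ExampleScraper/1.0", "https://example.com/products"))   # (True, 5)
print(check_robots(ROBOTS, "ExampleScraper/1.0", "https://example.com/private/a"))  # (False, 5)
```

A crawl delay from robots.txt is a reasonable starting point for the request interval, although the site's Cloudflare rate limit may be stricter.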

What role do APIs play in preventing Cloudflare Error 1015?

Advantages of using official APIs

Official APIs offer sanctioned data access with defined request limits. They are built to serve data to programs, so they usually handle automated traffic better than scraping HTML pages. APIs still have rate limits, but those limits are usually documented, so you can plan around them instead of discovering them through errors.

Using an official API helps avoid rate-limiting errors while staying within the site's rules. It often reduces the request load, because one API call can return data that would otherwise take several page requests, and it keeps the data flow consistent.

Exploring web scraping APIs as alternatives

Web scraping APIs are services built for structured data collection. They typically use techniques such as IP rotation and throttling to avoid limits, which helps them gather data at scale without triggering blocks.

Using a scraping API can make data gathering more efficient. Because the service distributes requests across many IP addresses, it helps prevent errors like Cloudflare Error 1015.

How can developers troubleshoot and resolve Cloudflare Error 1015?

Analyzing error messages and codes

When you hit Cloudflare 1015, start by analyzing the response: the HTTP status code (usually 429), the text of the error page, and any Retry-After header. Understanding what the error tells you helps resolve the issue effectively.

Then adjust your scraping strategy based on what the error message shows.

Developers should also check User-Agent strings, cookies, and cache settings to identify what is driving up the request count. The key adjustment is reducing the number of requests your scraping pattern sends.
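A sketch of that analysis step follows. It assumes a 1015 response arrives as HTTP 429 or as an error page that mentions 1015 (page wording can vary, so `looks_rate_limited` is a heuristic, and both helper names are hypothetical). It also parses the standard `Retry-After` header, which may hold either a number of seconds or an HTTP date, when the response includes one.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def looks_rate_limited(status: int, body: str) -> bool:
    """Heuristic: treat a 429 status or an error page mentioning 1015 as rate limiting."""
    text = body.lower()
    return status == 429 or "error 1015" in text or "error code: 1015" in text


def retry_after_seconds(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Parse a Retry-After header (delay-seconds or HTTP-date) into seconds to wait."""
    if value is None:
        return None
    value = value.strip()
    if value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # older Pythons raise TypeError, newer ValueError
        return None
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    if now is None:
        now = datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())


print(looks_rate_limited(429, "error code: 1015"))  # True
print(retry_after_seconds("120"))                    # 120.0
```

If `retry_after_seconds` returns a value, waiting at least that long before retrying is the most direct way to respect the limit.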

Adjusting scraping strategies

Scraping strategies must adapt to stay under rate limits. Spreading requests across multiple proxies can help avoid blocks, and adjusting scraping intervals can maintain access without triggering errors.

Adjust scraping strategies by:

- Using throttling to limit requests in a short period.

- Employing HTTP header rotators for better access.

- Distributing load across multiple accounts you are authorized to use.
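Throttling works best alongside a backoff policy for the times you still get rate limited. Below is a minimal sketch of exponential backoff with "full jitter"; `fetch` is a hypothetical stand-in for your own request function that returns an HTTP status code, and the base and cap delays are assumptions to tune for the site.

```python
import random
import time
from typing import Callable, Optional


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0,
                  rng: Optional[random.Random] = None) -> float:
    """Full-jitter exponential backoff: a random delay in [0, min(cap, base * 2**attempt)]."""
    if rng is None:
        rng = random.Random()
    return rng.uniform(0.0, min(cap, base * 2 ** attempt))


def call_with_backoff(fetch: Callable[[], int], max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep,
                      rng: Optional[random.Random] = None) -> int:
    """Call `fetch()` and retry while it returns 429, up to `max_attempts` calls in total.

    Returns the last status code seen.
    """
    status = fetch()
    for attempt in range(max_attempts - 1):
        if status != 429:
            break
        sleep(backoff_delay(attempt, rng=rng))  # wait longer after each consecutive 429
        status = fetch()
    return status


# Simulated server: rate limited twice, then OK (sleep is stubbed out for the demo).
responses = iter([429, 429, 200])
print(call_with_backoff(lambda: next(responses), sleep=lambda s: None))  # 200
```

The random jitter keeps many workers from retrying in lockstep, which would otherwise recreate the very burst that triggered the limit.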

When to contact Cloudflare support

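If rate-limiting errors persist, contact whoever controls the rate limiting rule. Site owners can ask Cloudflare’s support team for help adjusting their rate limiting configuration. Visitors and scrapers should contact the website owner instead, because Cloudflare only enforces the limits the owner has set.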

What are the long-term solutions for dealing with rate-limiting errors?

Building resilient scraping infrastructure

Building resilient scraping infrastructure requires careful planning and ongoing adjustment. A well-designed setup helps you stay under Cloudflare rate limits.

Strong infrastructure adapts to the site's rate limiting configuration, which keeps Cloudflare from returning 1015 errors.

Use a web scraping API to manage scraping patterns safely. Remember that the owner has set the rate limit to reduce server load; these limits cap requests within a given period, such as 10 seconds.

Free proxies often trigger rate-limiting errors. Consider premium proxies, which are less likely to be blocked. Make sure User-Agent strings are set correctly to resemble real users' browsers.

| Strategy | Description |
|---|---|
| Use rotating IP addresses | Helps prevent bans by distributing requests across IPs. |
| Implement request throttling | Manages request intervals to avoid triggering rate limits. |
| Adjust scraping frequency | Keeps request frequency below the site's Cloudflare rate limit. |
| Employ a reliable web scraping API | Uses an API designed for safe, compliant data scraping. |
| Configure User-Agent strings correctly | Mimics real users' browsers and avoids obvious bot signatures. |
| Regularly check whether the website has banned your IP | Helps identify IP bans early so you can adjust strategies. |
| Switch to premium proxies when needed | Premium proxies reduce the risk of rate limiting and blocks. |
| Use CAPTCHA-solving tools | Handles CAPTCHAs that can interrupt data scraping. |
| Avoid free proxies that trigger errors | Free proxies are often detected and blocked. |
| Use Cloudflare's help center for complex issues | Provides guidance for resolving scraping challenges and errors. |

Developing relationships with data providers

Building relationships with data providers offers reliable access to data. Providers often detect and block free proxies during scraping, but a direct partnership can let you collect data without triggering rate limits. These partnerships make data collection from trusted sources smoother.

Users who can log in to a website can often access more data. Data providers may offer special APIs for trusted users, which reduce the risk of tripping DDoS protection measures. In short, provider-approved access methods help prevent blocks.

When scraping, avoid sending too many requests quickly. Working with providers can help you solve access problems. Communicate your needs to ensure compliant and consistent data flow.

Exploring alternative data acquisition methods

Alternative methods such as APIs reduce the risk of hitting rate limits. APIs often have different, documented limits from those applied to a site's web pages, and they offer structured data access with fewer restrictions.

Other alternatives include public datasets or manual data collection. These methods prevent excessive web scraping attempts. Long-term solutions ensure stable data access and fewer blocks.

Conclusion

Handling Cloudflare Error 1015 requires careful planning and ethical judgment. Understand rate limits to prevent access issues. Use strategies such as rotating IPs and request throttling. Official APIs help keep data access safe and consistent.

Build relationships with data providers for reliable data access. Always use legal and ethical scraping methods. Avoid making too many requests too quickly. Follow best practices to reduce rate-limiting errors.

Scraping success depends on consistent, thoughtful approaches. Prioritize compliance to ensure long-term data access.