Python Wget: Mastering Programmatic File Downloads in 2025

Key Takeaways

- Driving the wget command-line tool from Python gives you download features the standard library lacks out of the box, such as resuming interrupted transfers and recursive downloads

- Integration through subprocess provides reliable automation while maintaining full access to wget's advanced features

- Modern implementations should prioritize error handling and rate limiting to ensure stable downloads at scale

- Understanding both wget's strengths and limitations helps determine when to use it versus alternatives like requests

- Proper configuration and monitoring are essential for production-grade download automation

Introduction

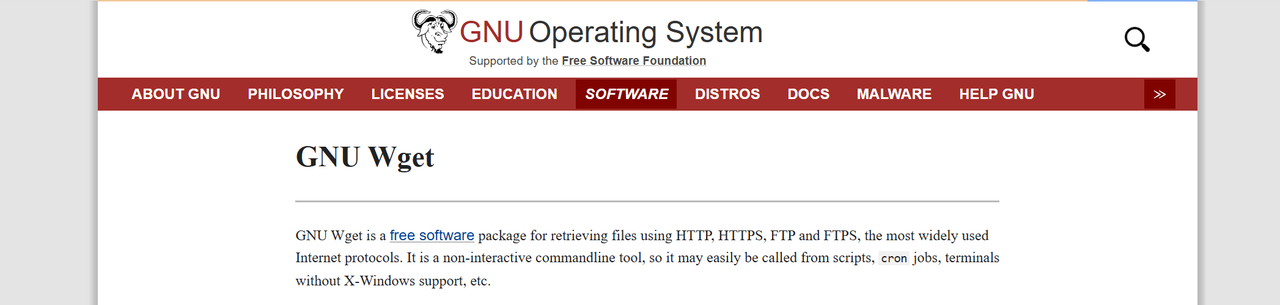

Reliable file downloading is a crucial requirement for many Python applications, from data science pipelines to automated testing systems. Python can download files on its own (for example with urllib or requests), but driving the wget command-line tool through the subprocess module gives you automatic retries, resumable transfers, and recursive mirroring with very little code. It also slots easily into larger data extraction workflows. This guide explores how to use wget programmatically from Python, with a focus on real-world applications and best practices.

Why Choose Wget for Python Downloads?

Understanding wget's advantages helps determine when it's the right tool for your needs. Here are the key benefits that make wget stand out:

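- Resume Support: Resumes interrupted transfers where the server supports range requests; `--continue` (`-c`) also picks up partial files left by an earlier run

- Recursive Downloads: Can mirror entire websites, rewriting links for offline browsing with `--convert-links`

- Protocol Support: Handles HTTP, HTTPS, and FTP, including retrieval through HTTP proxies

- Bandwidth Control: `--limit-rate` caps download speed to prevent network saturation

- Robot Rules: Respects robots.txt directives during recursive downloads by default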
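To make the mapping from features to flags concrete, here is a minimal sketch of a command builder. The function name `build_wget_command` and its parameters are hypothetical; the flags it emits (`--tries`, `--continue`, `--limit-rate`, `--recursive`, `--level`, `--convert-links`, `-e robots=off`) are standard GNU Wget options.

```python
from typing import List, Optional


def build_wget_command(
    url: str,
    resume: bool = True,
    tries: int = 3,
    limit_rate: Optional[str] = None,
    recursive_depth: Optional[int] = None,
    respect_robots: bool = True,
) -> List[str]:
    """Hypothetical helper: translate feature choices into a wget argument list."""
    cmd = ['wget', f'--tries={tries}']
    if resume:
        cmd.append('--continue')  # Resume a partial file from an earlier run
    if limit_rate:
        cmd.append(f'--limit-rate={limit_rate}')  # e.g. '500k' or '2m'
    if recursive_depth is not None:
        # Recursive mirroring with links rewritten for local browsing
        cmd += ['--recursive', f'--level={recursive_depth}', '--convert-links']
    if not respect_robots:
        cmd += ['-e', 'robots=off']  # Only matters for recursive retrievals
    cmd.append(url)
    return cmd
```

For example, `build_wget_command('https://example.com/data.zip', limit_rate='500k')` returns `['wget', '--tries=3', '--continue', '--limit-rate=500k', 'https://example.com/data.zip']`, which you can pass straight to `subprocess.run`. Building a list rather than a shell string also sidesteps quoting problems with unusual URLs.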

Setting Up Python Wget Integration

Prerequisites

Before integrating wget, ensure your system meets these requirements:

- Python 3.7+ installed

- wget command-line tool installed and available on your PATH

- Basic understanding of the subprocess module
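You can check these prerequisites at startup instead of discovering a missing binary mid-run. A minimal sketch (the function names are hypothetical) that uses `shutil.which` to locate wget and asks it for its version banner:

```python
import shutil
import subprocess
import sys
from typing import Optional


def find_wget() -> Optional[str]:
    """Return the full path to the wget executable, or None if it isn't on PATH."""
    return shutil.which('wget')


def check_prerequisites() -> None:
    """Raise RuntimeError if the Python version or wget installation is unsuitable."""
    # subprocess.run(capture_output=..., text=...) needs Python 3.7+
    if sys.version_info < (3, 7):
        raise RuntimeError('Python 3.7+ is required')
    wget_path = find_wget()
    if wget_path is None:
        raise RuntimeError('wget was not found on PATH')
    # Print the first line of wget's version banner for the logs
    version = subprocess.run(
        [wget_path, '--version'], capture_output=True, text=True, check=True
    )
    print(version.stdout.splitlines()[0])
```

Calling `check_prerequisites()` once when your script starts turns a confusing `FileNotFoundError` later on into a clear error message up front.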

Basic Integration Pattern

Here's a robust pattern for integrating wget with Python using subprocess:

```python
import subprocess
from typing import Dict, Optional, Union


def download_file(
    url: str,
    output_path: Optional[str] = None,
    retries: int = 3,
    timeout: int = 30,
) -> Dict[str, Union[bool, str]]:
    """
    Download a file using wget with error handling and retries.

    Args:
        url: The URL to download from
        output_path: Where to save the file (optional)
        retries: Total number of attempts wget makes (passed to --tries)
        timeout: Network timeout in seconds (passed to --timeout)

    Returns:
        Dict containing success status and message
    """
    cmd = ['wget', f'--tries={retries}', f'--timeout={timeout}']
    if output_path:
        cmd.extend(['-O', output_path])
    cmd.append(url)

    try:
        # Passing a list (not a shell string) avoids shell-quoting problems
        subprocess.run(cmd, check=True, capture_output=True, text=True)
        return {
            "success": True,
            "message": "Download completed successfully",
        }
    except FileNotFoundError:
        # Raised when the wget executable itself cannot be found
        return {
            "success": False,
            "message": "wget is not installed or not on PATH",
        }
    except subprocess.CalledProcessError as e:
        # wget writes its diagnostics to stderr
        return {
            "success": False,
            "message": f"Download failed (exit code {e.returncode}): {e.stderr}",
        }
```
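When a download fails, wget's exit status often says more than the stderr text alone. The mapping below follows the exit codes listed in the GNU Wget manual; the helper name `describe_wget_exit` is hypothetical, and you could call it from the `except subprocess.CalledProcessError` branch with `e.returncode`.

```python
from typing import Dict

# Exit statuses documented in the GNU Wget manual ("Exit Status" section)
WGET_EXIT_CODES: Dict[int, str] = {
    0: 'No problems occurred',
    1: 'Generic error code',
    2: 'Parse error (for instance, in command-line options, .wgetrc or .netrc)',
    3: 'File I/O error',
    4: 'Network failure',
    5: 'SSL verification failure',
    6: 'Username/password authentication failure',
    7: 'Protocol errors',
    8: 'Server issued an error response',
}


def describe_wget_exit(returncode: int) -> str:
    """Hypothetical helper: turn a wget exit status into a readable message."""
    return WGET_EXIT_CODES.get(returncode, f'Unknown wget exit status {returncode}')
```

Distinguishing code 4 (network failure, often worth retrying) from code 8 (for example an HTTP 404, usually not worth retrying) lets your retry logic skip hopeless URLs. When several kinds of error occur in one run, the manual notes that lower-numbered codes (other than 0 and 1) take precedence.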

Advanced Usage Patterns

Handling Large Files

When downloading large files, it's crucial to implement proper error handling and progress monitoring:

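```python
import subprocess


def download_large_file(url: str) -> None:
    """
    Download a large file with progress tracking and resume support.

    Args:
        url: The URL to download from

    Raises:
        subprocess.CalledProcessError: If wget exits with a non-zero status
    """
    cmd = [
        'wget',
        '--continue',            # Resume a partial file from an earlier run
        '--progress=bar:force',  # Draw the bar even when output is not a terminal
        '--tries=0',             # Retry indefinitely (fatal errors like 404 still stop)
        url,
    ]

    # wget writes progress to stderr. Text mode treats the bar's carriage-return
    # redraws as line endings, so each update arrives as its own line.
    process = subprocess.Popen(
        cmd,
        stdout=subprocess.DEVNULL,
        stderr=subprocess.PIPE,
        text=True,
    )

    for line in process.stderr:
        line = line.strip()
        if line:
            print(line)

    returncode = process.wait()
    if returncode != 0:
        raise subprocess.CalledProcessError(returncode, cmd)
```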
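If you want a numeric progress value (for a log, a callback, or a UI) instead of printing raw lines, you can pull the percentage out of each progress line. This is a minimal sketch that assumes each update contains a token such as `45%` followed by `[` or whitespace. wget's progress output is meant for humans and can vary between versions, so verify the pattern against your wget build. The function name is hypothetical.

```python
import re
from typing import Optional

# Assumption: progress lines contain "<digits>%" followed by '[', whitespace,
# or end of line. The lookahead skips URL escapes such as "%20".
_PERCENT_RE = re.compile(r'(\d{1,3})%(?=[\s\[]|$)')


def parse_progress(line: str) -> Optional[int]:
    """Return the completion percentage found in a wget progress line, or None."""
    match = _PERCENT_RE.search(line)
    if match is None:
        return None
    value = int(match.group(1))
    return value if 0 <= value <= 100 else None
```

Inside the `for line in process.stderr` loop, you would call `parse_progress(line)` and report the value whenever it is not `None`, ignoring the other diagnostic lines wget prints.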

Recursive Downloads

For mirroring websites or downloading directory structures, wget's recursive capabilities are invaluable:

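```python
import subprocess


def mirror_website(url: str, depth: int = 2) -> None:
    """
    Recursively download a website's content.

    Args:
        url: The starting URL
        depth: Maximum recursion depth
    """
    cmd = [
        'wget',
        '--recursive',          # Follow links found in downloaded pages
        f'--level={depth}',     # Limit how deep recursion goes
        '--page-requisites',    # Also fetch images, CSS, etc. needed to render pages
        '--adjust-extension',   # Save HTML/CSS files with matching extensions
        '--convert-links',      # Rewrite links for local browsing
        '--no-parent',          # Never ascend above the starting directory
        url,
    ]
    subprocess.run(cmd, check=True)
```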
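Recursive jobs can fire many requests in quick succession, so it is usually worth adding wget's own pacing options to the mirror command. This sketch reuses the same flags and adds `--wait`, `--random-wait`, and `--limit-rate`, which are standard GNU Wget options; the function names and default values are illustrative assumptions, not tuned recommendations.

```python
import subprocess
from typing import List


def polite_mirror_command(url: str, depth: int = 2, wait_seconds: int = 1,
                          limit_rate: str = '200k') -> List[str]:
    """Hypothetical helper: mirror flags plus wget's built-in pacing options."""
    return [
        'wget',
        '--recursive',
        f'--level={depth}',
        '--page-requisites',
        '--adjust-extension',
        '--convert-links',
        '--no-parent',
        f'--wait={wait_seconds}',      # Pause between retrievals
        '--random-wait',               # Vary the pause between 0.5x and 1.5x --wait
        f'--limit-rate={limit_rate}',  # Cap bandwidth ('k' suffix = kilobytes/s)
        url,
    ]


def polite_mirror(url: str, depth: int = 2) -> None:
    """Run the paced mirror; raises CalledProcessError on failure."""
    subprocess.run(polite_mirror_command(url, depth), check=True)
```

Keeping command construction separate from execution makes the flags easy to inspect or unit-test without touching the network.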

Best Practices and Optimization

Rate Limiting

To prevent overwhelming servers and avoid IP blocks, implement rate limiting:

```python
import random
from time import sleep
from typing import List


def rate_limited_download(urls: List[str], min_delay: float = 1.0, max_delay: float = 3.0) -> None:
    """
    Download multiple files with random delays between requests.

    Args:
        urls: List of URLs to download
        min_delay: Minimum seconds between downloads
        max_delay: Maximum seconds between downloads
    """
    for i, url in enumerate(urls):
        download_file(url)  # Defined in the basic integration pattern above
        if i < len(urls) - 1:
            # Pause only between downloads, not after the last one
            sleep(random.uniform(min_delay, max_delay))
```
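A fixed sleep after every URL slows you down even when consecutive URLs point at different servers. One alternative, sketched below under the assumption that spacing requests per host is what matters, is to remember when each host was last contacted and only wait when the same host comes up again too soon. The `PerHostThrottle` class is hypothetical; its clock and sleep functions are injectable so the logic can be tested without real delays.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlparse


class PerHostThrottle:
    """Enforce a minimum interval between requests to the same host."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleeper: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleeper
        self._last_request: Dict[str, float] = {}

    def wait(self, url: str) -> float:
        """Sleep if needed before requesting `url`; return the delay applied."""
        host = urlparse(url).netloc
        now = self._clock()
        last = self._last_request.get(host)
        delay = 0.0
        if last is not None:
            delay = max(0.0, self.min_interval - (now - last))
        if delay > 0:
            self._sleep(delay)
        # Record when this request actually goes out
        self._last_request[host] = now + delay
        return delay
```

In `rate_limited_download`, you would call `throttle.wait(url)` just before each `download_file(url)` instead of sleeping afterwards, so downloads from different hosts proceed without artificial pauses.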

Error Handling and Retries

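Robust error handling is crucial for production environments. One option is an outer retry layer with exponential backoff, here using the third-party tenacity library (pip install tenacity):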

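```python
from tenacity import retry, stop_after_attempt, wait_exponential


@retry(
    stop=stop_after_attempt(3),
    wait=wait_exponential(multiplier=1, min=4, max=10),
    reraise=True,  # Re-raise the original exception instead of tenacity's RetryError
)
def resilient_download(url: str) -> None:
    """
    Download with exponential backoff retry logic.

    Args:
        url: The URL to download
    """
    # download_file() already passes --tries=3 to wget, so each outer
    # attempt may itself make up to three tries.
    result = download_file(url)
    if not result["success"]:
        raise RuntimeError(result["message"])
```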
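If you'd rather not add a dependency, the same idea can be written with the standard library. This is a minimal sketch with hypothetical names: it retries a zero-argument callable with exponentially growing delays capped at a maximum, plus random ("full") jitter so many clients don't retry in lockstep. It is not a re-implementation of tenacity's exact timing.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar('T')


def retry_with_backoff(
    func: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 4.0,
    max_delay: float = 10.0,
    sleeper: Callable[[float], None] = time.sleep,
) -> T:
    """Call func() until it succeeds or `attempts` calls have failed."""
    if attempts < 1:
        raise ValueError('attempts must be at least 1')
    for attempt in range(attempts):
        try:
            return func()
        except Exception:  # In real code, catch narrower exception types
            if attempt == attempts - 1:
                raise  # Out of attempts: re-raise the last error
            # Exponential growth (base, 2*base, 4*base, ...) capped at max_delay
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: sleep a random time up to the computed ceiling
            sleeper(random.uniform(0, delay))
    raise AssertionError('unreachable')  # Loop always returns or raises
```

To use it with wget, wrap the body of `resilient_download` in a small function that raises when `download_file(url)` reports failure, and pass that function to `retry_with_backoff`.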

Monitoring and Logging

For production systems, implement comprehensive logging and monitoring:

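```python
import logging
import time
from datetime import datetime

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger('wget_downloads')


def monitored_download(url: str) -> None:
    """
    Download with logging and basic metrics.

    Args:
        url: The URL to download
    """
    start_time = datetime.now()
    start = time.monotonic()  # Monotonic clock is safer for measuring durations
    try:
        result = download_file(url)
        duration = time.monotonic() - start
        # download_file() reports failures in its return value rather than raising
        level = logging.INFO if result['success'] else logging.WARNING
        logger.log(level, {
            'url': url,
            'success': result['success'],
            'duration_seconds': round(duration, 3),
            'timestamp': start_time.isoformat(),
        })
    except Exception:
        # logger.exception records the traceback along with the message
        logger.exception("Download failed: %s", url)
        raise
```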
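The monitoring example above logs the string representation of a dictionary, which is readable but awkward for log aggregation tools. A common alternative is to emit one JSON object per line. The sketch below assumes you pass the metrics via `extra={'metrics': {...}}`; the `JsonFormatter` class, `make_json_logger`, and the `metrics` key are hypothetical names, while `logging.Formatter`, the `extra` argument, and `json.dumps` are standard library features.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            'level': record.levelname,
            'logger': record.name,
            'message': record.getMessage(),
        }
        # Merge structured fields passed via extra={'metrics': {...}}
        metrics = getattr(record, 'metrics', None)
        if isinstance(metrics, dict):
            payload.update(metrics)
        return json.dumps(payload)


def make_json_logger(name: str, stream=None) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream` (stderr by default)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # Avoid duplicate output via the root logger
    return logger
```

A call such as `log.info('download finished', extra={'metrics': {'url': url, 'success': True}})` then produces a single JSON line that tools can filter on `url` or `success` directly.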

Real-World Implementation Stories

Technical discussions across various platforms reveal interesting patterns in how developers are using wget with Python in production environments. Many developers appreciate wget's simplicity for basic file downloading tasks, noting that beyond having Python installed, the implementation is straightforward and requires minimal coding knowledge.

A common theme among practitioners is the importance of proper error handling and retry mechanisms. Engineers frequently mention encountering issues with interrupted downloads and incomplete files, particularly when dealing with large archives or unstable connections. Many have found success by combining wget's built-in retry capabilities with custom Python wrapper functions that add further error handling.
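One such wrapper layer is verifying the file after wget exits, since a zero exit status doesn't prove you received the bytes you expected. A minimal sketch, assuming the publisher provides a SHA-256 checksum to compare against (the function names are hypothetical):

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large downloads don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open('rb') as f:
        for chunk in iter(lambda: f.read(chunk_size), b''):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: Path, expected_sha256: str) -> bool:
    """Return True if the file exists and matches the expected checksum."""
    if not path.is_file():
        return False
    return sha256_of(path) == expected_sha256.strip().lower()
```

If a check fails, it is usually safer to delete the file and download it again from scratch than to resume it with `--continue`, which keeps whatever bytes are already on disk.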

Interestingly, developers report varying experiences with different download patterns. While some users successfully employ wget for recursive downloads of entire websites, others recommend using alternative approaches for specific scenarios. For instance, when dealing with platforms like Internet Archive, some developers suggest combining wget with platform-specific APIs for more reliable results, especially when handling complex directory structures or large file sets.

The community also emphasizes the importance of understanding your use case before committing to wget. While it excels at straightforward downloads and recursive fetching, developers working with APIs or requiring fine-grained control over HTTP requests often find libraries like requests more suitable. This has led to a hybrid approach in many organizations, where wget handles bulk downloads while other tools manage more complex HTTP interactions.

When to Use Alternatives

While wget is powerful, sometimes other tools might be more appropriate:

| Use Case | Recommended Tool | Reason |
|---|---|---|
| API Integration | Requests | Better header/auth handling |
| Simple Downloads | urllib | Built-in, no dependencies |
| Large-Scale Scraping | Scrapy | Better concurrency handling |
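For the simple-downloads case, a standard-library version needs no external tools at all. A minimal sketch using `urllib.request.urlopen` and `shutil.copyfileobj` to stream the response to disk (the function name is hypothetical; unlike wget, it has no built-in retries or resume support):

```python
import shutil
import urllib.request
from pathlib import Path


def simple_download(url: str, dest: Path, timeout: float = 30.0) -> Path:
    """Stream `url` to `dest` without loading the whole body into memory."""
    # urlopen raises urllib.error.URLError (or its subclass HTTPError) on failure
    with urllib.request.urlopen(url, timeout=timeout) as response, dest.open('wb') as out:
        shutil.copyfileobj(response, out)
    return dest
```

For example, `simple_download('https://example.com/data.csv', Path('data.csv'))` saves the file in the current directory. Once you need resume, recursion, or bandwidth limits, the wget patterns above are the better fit.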

Conclusion

Python wget integration provides a robust solution for automated file downloads, especially when dealing with large files or requiring features like resume support and recursive downloads. By following the patterns and practices outlined in this guide, you can build reliable download automation systems that scale well and handle errors gracefully.