Web Scraping with Go: A Practical Guide from Basics to Production

Key Takeaways

- Go's built-in concurrency and memory management make it ideal for large-scale web scraping projects

- Colly is the most popular Go scraping framework; its documentation cites more than 1k requests/sec on a single core, and it ships with helpers for request delays, proxy switching, and User-Agent randomization

- Modern web scraping requires careful consideration of anti-bot measures, proxy rotation, and respectful crawling practices

- Using proper error handling and rate limiting is crucial for production-grade scrapers

- Go's ecosystem offers multiple approaches from basic HTTP clients to full browser automation

Introduction

Web scraping has become an essential tool for data-driven businesses, from market research to competitive analysis. Go (Golang) has emerged as a powerful language for building scalable web scrapers, thanks to its efficient memory management, built-in concurrency support, and robust standard library.

This guide walks through building production-ready web scrapers with Go, from a first HTTP request with the standard library to concurrency, anti-bot handling, and error recovery with Colly. Whether you're a beginner or an experienced developer, you'll find practical approaches to common scraping challenges.

Why Choose Go for Web Scraping?

Go offers several advantages that make it particularly well-suited for web scraping:

- High Performance: Go's compilation to native code ensures fast execution

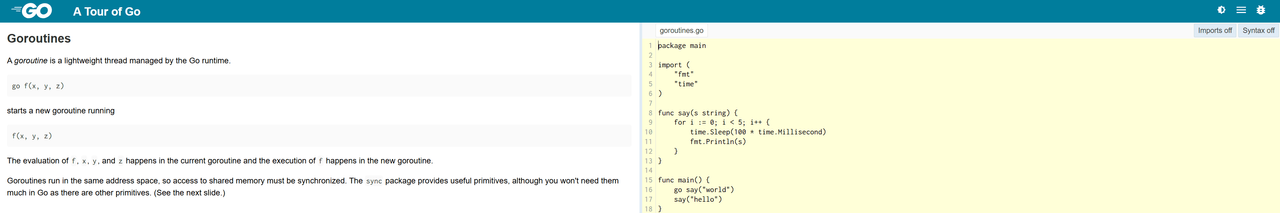

- Built-in Concurrency: Goroutines make parallel scraping efficient and manageable

- Memory Efficiency: Automatic garbage collection helps manage resources during large scraping tasks

- Rich Ecosystem: Multiple libraries and tools specifically designed for web scraping

Setting Up Your Go Scraping Environment

Prerequisites

Before we begin, ensure you have:

- Go 1.22+ installed (installation guide)

- A code editor (VS Code recommended with Go extension)

- Basic understanding of HTML and CSS selectors

Project Setup

    mkdir go-scraper
    cd go-scraper
    go mod init scraper

Installing Essential Libraries

    go get github.com/gocolly/colly/v2
    go get github.com/PuerkitoBio/goquery

Basic Web Scraping with Go's Standard Library

Let's start with a simple example using Go's built-in packages:

    package main

    import (
        "fmt"
        "io"
        "net/http"
        "time"
    )

    func main() {
        // Create HTTP client with timeout
        client := &http.Client{
            Timeout: 30 * time.Second,
        }

        // Send GET request
        resp, err := client.Get("https://example.com")
        if err != nil {
            panic(err)
        }
        // Always close the body so the connection can be reused
        defer resp.Body.Close()

        // Read response body
        body, err := io.ReadAll(resp.Body)
        if err != nil {
            panic(err)
        }

        fmt.Println(string(body))
    }
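The example above prints whatever the server returns, including error pages. A minimal sketch of a slightly more defensive fetch follows. The helper names `fetch` and `newDemoServer` and the 1 MiB body cap are assumptions made for illustration, and the demo talks to a local test server so it runs without network access.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"time"
)

// maxBodyBytes caps how much of a response is read (an arbitrary 1 MiB for this sketch).
const maxBodyBytes = 1 << 20

// fetch is a hypothetical helper: it returns the body for 2xx responses
// and an error for any other status code.
func fetch(client *http.Client, url string) (string, error) {
	resp, err := client.Get(url)
	if err != nil {
		return "", fmt.Errorf("requesting %s: %w", url, err)
	}
	defer resp.Body.Close()

	if resp.StatusCode < 200 || resp.StatusCode > 299 {
		return "", fmt.Errorf("requesting %s: unexpected status %s", url, resp.Status)
	}

	// LimitReader guards against unexpectedly large responses.
	body, err := io.ReadAll(io.LimitReader(resp.Body, maxBodyBytes))
	if err != nil {
		return "", fmt.Errorf("reading %s: %w", url, err)
	}
	return string(body), nil
}

// newDemoServer starts a local test server so the example runs offline.
func newDemoServer() *httptest.Server {
	mux := http.NewServeMux()
	mux.HandleFunc("/ok", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "<h1>hello</h1>")
	})
	mux.HandleFunc("/blocked", func(w http.ResponseWriter, r *http.Request) {
		http.Error(w, "slow down", http.StatusTooManyRequests)
	})
	return httptest.NewServer(mux)
}

func main() {
	srv := newDemoServer()
	defer srv.Close()

	client := &http.Client{Timeout: 10 * time.Second}

	for _, path := range []string{"/ok", "/blocked"} {
		body, err := fetch(client, srv.URL+path)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println("body:", body)
	}
}
```

Returning an error for non-2xx responses lets the caller decide whether to retry, skip, or stop, instead of parsing an error page as if it were content.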

Building a Production-Grade Scraper with Colly

What is Colly?

Colly is a powerful scraping framework for Go that provides:

- Clean callback-based API

- Automatic cookie and session handling

- Cache management

- robots.txt support (ignored by default; set IgnoreRobotsTxt to false on the collector to enforce it)

- Proxy rotation capabilities

Basic Colly Scraper Structure

    package main

    import (
        "log"

        "github.com/gocolly/colly/v2"
    )

    func main() {
        // Initialize collector
        c := colly.NewCollector(
            colly.AllowedDomains("example.com"),
            colly.UserAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/115.0"),
        )

        // Set up callbacks: OnHTML fires for every element matching the selector
        c.OnHTML("a[href]", func(e *colly.HTMLElement) {
            link := e.Attr("href")
            log.Printf("Found link: %s", link)
        })

        // OnRequest fires before each request is sent
        c.OnRequest(func(r *colly.Request) {
            log.Printf("Visiting %s", r.URL)
        })

        // Start scraping; Visit returns an error if the request fails
        if err := c.Visit("https://example.com"); err != nil {
            log.Fatal(err)
        }
    }

Advanced Scraping Techniques

Parallel Scraping with Goroutines

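One of Go's strongest features is its concurrency model. Colly builds on it with an async mode that issues requests concurrently; the snippet below enables it and caps how many requests run in parallel: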

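    c := colly.NewCollector(
        colly.Async(true), // issue requests concurrently instead of blocking on each Visit
    )

    // Limit concurrent requests; Limit returns an error if the rule is invalid
    if err := c.Limit(&colly.LimitRule{
        DomainGlob:  "*",
        Parallelism: 2,
        RandomDelay: 5 * time.Second, // random pause of up to 5s before each request
    }); err != nil {
        log.Fatal(err)
    }

    // Queue requests; in async mode Visit returns immediately
    c.Visit("https://example.com")

    // Don't forget to wait: Wait blocks until all queued requests have finished
    c.Wait()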
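For scrapers that do not use Colly, the same bounded parallelism can be built directly from goroutines. The following is a sketch under stated assumptions: `scrapeAll` and `fetchFunc` are hypothetical names, and the fetch step is a stand-in function rather than a real HTTP call.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// fetchFunc stands in for a real HTTP fetch (hypothetical, for this sketch).
type fetchFunc func(url string) (string, error)

// scrapeAll runs fetch over urls with at most parallelism calls in flight
// and returns the results keyed by URL. parallelism must be at least 1.
func scrapeAll(urls []string, parallelism int, fetch fetchFunc) map[string]string {
	var (
		mu      sync.Mutex
		wg      sync.WaitGroup
		results = make(map[string]string, len(urls))
		sem     = make(chan struct{}, parallelism) // counting semaphore
	)
	for _, u := range urls {
		wg.Add(1)
		sem <- struct{}{} // blocks while parallelism fetches are already running
		go func(u string) {
			defer wg.Done()
			defer func() { <-sem }() // release the slot
			body, err := fetch(u)
			if err != nil {
				body = "error: " + err.Error()
			}
			mu.Lock() // maps are not safe for concurrent writes
			results[u] = body
			mu.Unlock()
		}(u)
	}
	wg.Wait()
	return results
}

func main() {
	// A fake fetch that simulates network latency.
	fake := func(url string) (string, error) {
		time.Sleep(50 * time.Millisecond)
		return "content of " + url, nil
	}
	urls := []string{
		"https://example.com/a",
		"https://example.com/b",
		"https://example.com/c",
	}
	for u, body := range scrapeAll(urls, 2, fake) {
		fmt.Println(u, "->", body)
	}
}
```

The buffered channel plays the role that Parallelism plays in a Colly limit rule: it caps in-flight requests regardless of how many URLs are queued.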

Handling Anti-Bot Measures

Modern websites employ various techniques to detect and block scrapers. Here's how to handle them:

- Rotate User Agents: Maintain a pool of realistic browser User-Agent strings

- Implement Delays: Add random delays between requests

- Use Proxy Rotation: Distribute requests across multiple IP addresses

- Handle CAPTCHAs: Integrate with CAPTCHA solving services when needed

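    // Example proxy rotation
    // (needs the "math/rand", "net/http", and "net/url" imports)
    proxies := []string{
        "http://proxy1.example.com:8080",
        "http://proxy2.example.com:8080",
    }

    // Pick a random proxy from the pool for every outgoing request
    c.SetProxyFunc(func(_ *http.Request) (*url.URL, error) {
        proxy := proxies[rand.Intn(len(proxies))]
        return url.Parse(proxy)
    })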
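The first two items in the list above, User-Agent rotation and random delays, can be sketched with the standard library alone. The type `userAgentPool`, the function `jitteredDelay`, and the agent strings are placeholders invented for this example; no request is actually sent.

```go
package main

import (
	"fmt"
	"math/rand"
	"net/http"
	"sync"
	"time"
)

// userAgentPool hands out User-Agent strings in round-robin order.
// It is safe to call Next from multiple goroutines.
type userAgentPool struct {
	mu     sync.Mutex
	agents []string
	next   int
}

// Next returns the next User-Agent in the rotation.
func (p *userAgentPool) Next() string {
	p.mu.Lock()
	defer p.mu.Unlock()
	ua := p.agents[p.next%len(p.agents)]
	p.next++
	return ua
}

// jitteredDelay returns base plus a random extra in [0, spread).
func jitteredDelay(base, spread time.Duration) time.Duration {
	if spread <= 0 {
		return base
	}
	return base + time.Duration(rand.Int63n(int64(spread)))
}

func main() {
	pool := &userAgentPool{agents: []string{
		"example-agent-A/1.0", // placeholders; substitute real browser strings
		"example-agent-B/1.0",
	}}

	for i := 0; i < 3; i++ {
		// Build the request but do not send it; this demo only shows the headers.
		req, err := http.NewRequest(http.MethodGet, "https://example.com", nil)
		if err != nil {
			panic(err)
		}
		req.Header.Set("User-Agent", pool.Next())

		wait := jitteredDelay(100*time.Millisecond, 200*time.Millisecond)
		fmt.Printf("request %d: UA=%q, waiting %v first\n", i, req.Header.Get("User-Agent"), wait)
		time.Sleep(wait)
	}
}
```

Randomizing the delay avoids the fixed request cadence that simple rate-based detectors look for, while the base value keeps the load on the target server bounded.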

Real-World Example: E-commerce Product Scraper

Let's build a practical scraper that extracts product information from an e-commerce site:

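    package main

    import (
        "encoding/json"
        "log"
        "os"
        "strconv"
        "strings"

        "github.com/gocolly/colly/v2"
    )

    type Product struct {
        Name        string  `json:"name"`
        Price       float64 `json:"price"`
        Description string  `json:"description"`
        URL         string  `json:"url"`
    }

    func main() {
        products := make([]Product, 0)

        c := colly.NewCollector(
            colly.AllowedDomains("store.example.com"),
        )

        c.OnHTML(".product-card", func(e *colly.HTMLElement) {
            // Strip a leading currency symbol so ParseFloat accepts text like "$19.99"
            priceText := strings.TrimPrefix(e.ChildText(".price"), "$")
            price, err := strconv.ParseFloat(priceText, 64)
            if err != nil {
                log.Printf("Could not parse price %q: %v", priceText, err)
            }

            products = append(products, Product{
                Name:        e.ChildText("h2"),
                Price:       price,
                Description: e.ChildText(".description"),
                // URL of the page on which the card was found
                URL: e.Request.URL.String(),
            })
        })

        if err := c.Visit("https://store.example.com/products"); err != nil {
            log.Fatal(err)
        }

        // Export to JSON
        if err := json.NewEncoder(os.Stdout).Encode(products); err != nil {
            log.Fatal(err)
        }
    }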
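Price text on real pages often carries currency symbols, thousands separators, or stray whitespace, all of which strconv.ParseFloat rejects. A small sketch of a more tolerant parser follows. The name `parsePrice` is hypothetical, and the function assumes a dot as the decimal separator and that the text contains nothing numeric besides the price.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parsePrice converts display text such as "$1,299.99" into a float64.
// Assumption: "." is the decimal separator and "," only groups thousands.
func parsePrice(text string) (float64, error) {
	cleaned := strings.Map(func(r rune) rune {
		if (r >= '0' && r <= '9') || r == '.' {
			return r
		}
		return -1 // drop currency symbols, commas, letters, and whitespace
	}, text)
	if cleaned == "" {
		return 0, fmt.Errorf("no digits in price %q", text)
	}
	return strconv.ParseFloat(cleaned, 64)
}

func main() {
	for _, text := range []string{"$1,299.99", " 19.99 USD", "Sold out"} {
		price, err := parsePrice(text)
		if err != nil {
			fmt.Printf("%q: %v\n", text, err)
			continue
		}
		fmt.Printf("%q -> %.2f\n", text, price)
	}
}
```

Returning the error rather than discarding it lets the scraper log or skip products whose price could not be read, instead of silently storing a price of zero. For monetary arithmetic, storing integer cents may be a safer choice than float64.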

Best Practices for Production Scraping

Error Handling

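    c.OnError(func(r *colly.Response, err error) {
        log.Printf("Error scraping %v: %v", r.Request.URL, err)

        if r.StatusCode == http.StatusTooManyRequests {
            // Handle rate limiting: pause, then retry the same request.
            // The sleep blocks the calling goroutine, and nothing here caps
            // the number of retries, so add a limit before using this in production.
            time.Sleep(10 * time.Minute)
            if retryErr := r.Request.Retry(); retryErr != nil {
                log.Printf("Retry failed for %v: %v", r.Request.URL, retryErr)
            }
        }
    })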
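A fixed ten-minute pause with unlimited retries can stall a scraper indefinitely. One common alternative is exponential backoff with a retry cap, sketched below with the standard library only. The constants and the names `backoffDelay`, `withRetry`, `printDelay`, and `errRateLimited` are assumptions for illustration, and the sleep function is injected so the demo prints delays instead of waiting.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// Illustrative values; tune them for the target site.
const (
	baseDelay  = 500 * time.Millisecond
	maxDelay   = 30 * time.Second
	maxRetries = 4
)

// errRateLimited simulates an HTTP 429 response in this sketch.
var errRateLimited = errors.New("429 too many requests")

// backoffDelay returns baseDelay doubled once per attempt, capped at maxDelay.
func backoffDelay(attempt int) time.Duration {
	d := baseDelay
	for i := 0; i < attempt; i++ {
		d *= 2
		if d >= maxDelay {
			return maxDelay
		}
	}
	return d
}

// withRetry calls op until it succeeds or maxRetries retries are used up.
// It returns the number of calls made and the last error.
func withRetry(op func() error, sleep func(time.Duration)) (attempts int, err error) {
	for attempt := 0; ; attempt++ {
		err = op()
		if err == nil || attempt == maxRetries {
			return attempt + 1, err
		}
		sleep(backoffDelay(attempt))
	}
}

// printDelay stands in for time.Sleep so the demo finishes instantly.
func printDelay(d time.Duration) {
	fmt.Println("would wait", d)
}

func main() {
	calls := 0
	attempts, err := withRetry(func() error {
		calls++
		if calls < 3 {
			return errRateLimited // fail the first two calls
		}
		return nil
	}, printDelay)
	fmt.Println("attempts:", attempts, "error:", err)
}
```

In a real scraper, time.Sleep would be passed as the sleep argument, and op would wrap the request that returned the 429.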

Data Storage

Consider these options for storing scraped data:

- CSV files for simple datasets

- PostgreSQL for structured data with relationships

- MongoDB for flexible schema requirements

- Redis for caching and queue management
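As an illustration of the simplest option in the list above, here is a sketch that writes scraped products to CSV with the standard encoding/csv package. The Product fields mirror the struct used earlier in this guide; the function names `writeProductsCSV` and `productsToCSV` and the sample data are assumptions for this example.

```go
package main

import (
	"encoding/csv"
	"io"
	"log"
	"os"
	"strconv"
	"strings"
)

// Product mirrors the struct from the e-commerce example.
type Product struct {
	Name        string
	Price       float64
	Description string
	URL         string
}

// writeProductsCSV writes a header row followed by one row per product.
func writeProductsCSV(w io.Writer, products []Product) error {
	cw := csv.NewWriter(w)
	if err := cw.Write([]string{"name", "price", "description", "url"}); err != nil {
		return err
	}
	for _, p := range products {
		record := []string{
			p.Name,
			strconv.FormatFloat(p.Price, 'f', 2, 64), // two decimal places
			p.Description,
			p.URL,
		}
		if err := cw.Write(record); err != nil {
			return err
		}
	}
	cw.Flush() // the writer buffers; Flush pushes rows to w
	return cw.Error()
}

// productsToCSV is a convenience wrapper that returns the CSV as a string.
func productsToCSV(products []Product) (string, error) {
	var sb strings.Builder
	err := writeProductsCSV(&sb, products)
	return sb.String(), err
}

func main() {
	products := []Product{
		{Name: "Widget", Price: 19.99, Description: "Small, blue", URL: "https://store.example.com/widget"},
		{Name: "Gadget", Price: 5, Description: "Plain", URL: "https://store.example.com/gadget"},
	}
	if err := writeProductsCSV(os.Stdout, products); err != nil {
		log.Fatal(err)
	}
}
```

The csv package quotes fields that contain commas or quotes, so descriptions scraped from arbitrary pages do not corrupt the column layout.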

From the Field: Developer Experiences

Technical discussions across various platforms reveal interesting patterns in how teams are implementing Go-based web scraping solutions in production. Engineering teams consistently highlight Go's performance advantages, with one developer reporting a significant memory reduction after migrating from Python to Go for a service scraping over 10,000 websites monthly.

When it comes to choosing scraping libraries, Colly emerges as the community favorite for its ease of use and performance capabilities. Developers particularly appreciate its jQuery-like syntax for parsing webpage elements and its ability to easily map scraped data into Go structs for further processing. However, some engineers point out that Colly's lack of JavaScript rendering capabilities can be limiting for modern single-page applications.

For JavaScript-heavy websites, the community suggests alternative approaches. ChromeDP receives positive mentions for handling dynamic content and providing precise control through XPath selectors. Some developers have also started adopting newer solutions like Geziyor, which offers built-in JavaScript rendering support while maintaining Go's performance benefits.

Interestingly, many developers report using Go as part of a hybrid approach. Some teams prototype their scrapers in Python for rapid development, then port to Go for production deployment and enhanced performance. This workflow allows teams to leverage Python's ease of use during the exploration phase while benefiting from Go's superior resource management and concurrency in production.

Future of Web Scraping with Go

As we look ahead to 2025 and beyond, several trends are shaping the future of web scraping with Go:

- Increased focus on ethical scraping and compliance with robots.txt

- Better integration with AI/ML for intelligent scraping

- Enhanced tools for handling JavaScript-heavy websites

- Improved support for distributed scraping architectures

Conclusion

Go provides a robust foundation for building efficient and scalable web scrapers. By combining Go's performance characteristics with frameworks like Colly and following best practices, you can create reliable scraping solutions that handle modern web challenges effectively.

Remember to always respect websites' terms of service and implement rate limiting to avoid overwhelming target servers. As the web continues to evolve, staying updated with the latest scraping techniques and tools will be crucial for maintaining successful scraping operations.